Pipeline - Dealing with Errors

Introduction

Handling errors is essential in any pipeline. Although not all of the scripts are ready yet, I need to consider the potential errors now and plan for them early.

This section describes the initial error handling for two stages: downloading and analysis.

Downloading

For downloading, I can think of two kinds of errors. In the first, the file on the remote server is good, but it was damaged during transmission. In the second, the file was transferred without error, but the content on the remote server is itself invalid.

Error While Transferring

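Since no MD5 or similar checksum is provided, I have to find another way to validate the files. All the source files are in .fastq.gz format, which means they are gzip archives. The most practical way to check an archive is to decompress it completely and see whether any error occurs. This works as a substitute for a published checksum because every gzip member stores a CRC-32 checksum and the uncompressed length in its trailer, so a full decompression also verifies the data.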
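A minimal sketch of this check using Python's standard gzip module, assuming the downloaded files sit on local disk. The function name gzip_is_intact is hypothetical; from the shell, gzip -t file.fastq.gz performs an equivalent test.

```python
import gzip
import os
import zlib


def gzip_is_intact(path, chunk_size=1 << 20):
    """Return True if `path` decompresses fully as gzip, False otherwise."""
    try:
        # Python's gzip module reads a zero-byte file as empty data without
        # raising, so treat an empty download as a failure explicitly.
        if os.path.getsize(path) == 0:
            return False
        with gzip.open(path, "rb") as fh:
            # Stream through the whole archive in chunks; the CRC-32 and length
            # stored in each member's trailer are checked when the member ends.
            while fh.read(chunk_size):
                pass
    except (OSError, EOFError, zlib.error):
        # OSError covers missing files and gzip.BadGzipFile (bad magic number,
        # CRC or length mismatch); EOFError means the archive was truncated;
        # zlib.error means the compressed stream itself is corrupt.
        return False
    return True
```

The re-download list is then just the files that fail the check, e.g. `redownload = [p for p in downloaded if not gzip_is_intact(p)]`. Reading in chunks keeps memory use flat even for multi-gigabyte FASTQ archives.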

If an archive fails to decompress correctly, the file is added to the re-download list. The one downside of this method is that if the archive on the remote server is itself damaged, the script will end up in an endless download loop. To prevent this, I plan to set a maximum number of re-download attempts: if a file has been downloaded more than 3 times and still reports an error, it is removed from the list.
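The capped retry loop could be sketched as follows. The download and is_valid callables are hypothetical placeholders (for example, a wrapper around whatever download tool the pipeline uses, and the gzip integrity check above). Here max_attempts counts total download attempts including the first one; whether the cutoff falls on the 3rd or 4th attempt is an assumption to adjust.

```python
from collections import deque


def download_with_retries(names, download, is_valid, max_attempts=3):
    """Download each name, retrying failures up to `max_attempts` times.

    `download(name)` is a hypothetical callable that fetches the file and
    returns something `is_valid` can inspect (e.g. a local path).
    Returns (ok, failed): names that validated, and names given up on.
    """
    names = list(names)
    attempts = {name: 0 for name in names}
    queue = deque(names)  # acts as the re-download list
    ok, failed = [], []
    while queue:
        name = queue.popleft()
        attempts[name] += 1
        result = download(name)
        if is_valid(result):
            ok.append(name)
        elif attempts[name] >= max_attempts:
            # Still broken after the cap: most likely damaged on the remote
            # server, so drop it instead of looping forever.
            failed.append(name)
        else:
            queue.append(name)  # put it back for another attempt
    return ok, failed
```

Keeping a per-file attempt counter, rather than a single global retry count, means one permanently broken file cannot use up the retries of the others.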

Error in the Source

There are two kinds of errors in this category. The first is a damaged FASTQ file (an archive that decompresses cleanly but whose content is corrupt), which we won't be able to detect until it enters the analysis pipeline, so we leave it to the analysis pipeline to handle.

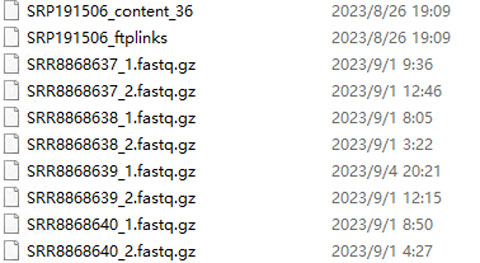

The second is an unpaired file. A paired-end source requires two files with matching names (XXXX_1.fastq.gz and XXXX_2.fastq.gz). If either of the two files is missing or damaged, both files need to be removed from the list. This is easy to detect with a script.
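A minimal sketch of that pairing check, assuming file names follow the XXXX_1.fastq.gz / XXXX_2.fastq.gz pattern exactly and that the set of damaged files comes from an earlier integrity check. The function name split_pairs is hypothetical.

```python
import re
from collections import defaultdict

# "<sample>_1.fastq.gz" / "<sample>_2.fastq.gz"; the greedy sample group
# still works when the sample name itself contains underscores.
PAIR_RE = re.compile(r"^(?P<sample>.+)_(?P<read>[12])\.fastq\.gz$")


def split_pairs(filenames, damaged=frozenset()):
    """Return (paired, dropped).

    paired:  list of (read1, read2) tuples with both mates present and intact.
    dropped: files to remove because their mate is missing or either is damaged.
    """
    mates = defaultdict(dict)
    dropped = []
    for name in filenames:
        m = PAIR_RE.match(name)
        if m is None:
            dropped.append(name)  # name does not fit the pattern: cannot be paired
            continue
        mates[m["sample"]][m["read"]] = name

    paired = []
    for sample, reads in sorted(mates.items()):
        files = sorted(reads.values())
        if set(reads) == {"1", "2"} and not any(f in damaged for f in files):
            paired.append((reads["1"], reads["2"]))
        else:
            dropped.extend(files)  # remove both mates, not just the bad one
    return paired, dropped
```

For example, given A_1, A_2, B_1, C_1 and C_2 (with C_2 damaged), only the A pair survives; B_1, C_1 and C_2 all end up in the removal list.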

Analysis

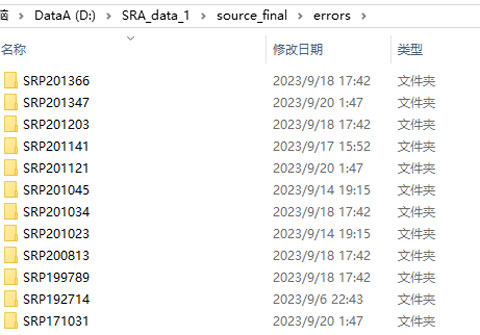

I can think of two kinds of errors in this part: either the output is invalid, or the analysis pipeline did not complete. For invalid output, I plan to find the invalid entries, remove them, and process the remaining file(s) again. For an incomplete run, I plan to move the affected samples to a dedicated location for manual processing. Once a few such cases have accumulated, I will be able to adapt the analysis pipeline to handle these errors specifically.
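Both strategies could be sketched roughly as below. Two things here are assumptions rather than part of the plan above: that a finished run leaves a marker file (called DONE here) in its output directory, and that validity of an output entry can be expressed as a predicate, since what makes an entry invalid depends on the output format. The names drop_invalid and quarantine_incomplete are hypothetical.

```python
import shutil
from pathlib import Path


def drop_invalid(entries, is_valid):
    """Split entries into (kept, removed) using a caller-supplied validity test."""
    kept, removed = [], []
    for entry in entries:
        (kept if is_valid(entry) else removed).append(entry)
    return kept, removed


def quarantine_incomplete(run_dirs, manual_dir, marker="DONE"):
    """Move run directories lacking `marker` into `manual_dir`.

    Assumes (hypothetically) that the last pipeline step writes `marker`
    into the run directory, so a missing marker means the run did not finish.
    Returns the names of the moved directories.
    """
    manual = Path(manual_dir)
    manual.mkdir(parents=True, exist_ok=True)
    moved = []
    for run in map(Path, run_dirs):
        if not (run / marker).exists():
            # Keep the whole directory together for manual inspection.
            shutil.move(str(run), str(manual / run.name))
            moved.append(run.name)
    return moved
```

The entries returned as kept by drop_invalid would then be fed back into the pipeline, while the quarantined directories collect the samples needed later to adapt the pipeline.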