Preliminary - Hardware and the Future

Introduction

Once each server has been assigned its tasks, it's time to decide its configuration. While doing so, we also need to consider how the hardware will be used after the project and how general its components are.

This section has seven components: platform, general server, analysis server, compression server, tape library, storage server, and network.

Platform

The platform here refers to the server model without CPU, storage, and memory. Although storage and memory devices (if of the same generation) are likely to be interchangeable between platforms, other components like CPUs or riser cards are not. We should therefore have as few platforms as possible and, at the same time, use interchangeable components such as same-generation memory as much as possible.

For the purpose of the project, we should have at least a storage model, a high-performance analysis model, and a general/compression model.

Storage Model

For storage, I have two options. The first one is a dedicated storage server that has up to 60 * 3.5-inch HDD slots. The other one is a conventional model that has up to 12 * 3.5-inch HDD slots.

Based on my past experience and the cost, I chose to go with the conventional model. The dedicated storage server requires a powerful RAID card and network card. Also, putting all the data on the same server is risky in case of a server failure. In addition, the conventional model provides more PCIe slots and therefore better expandability, which will benefit the future use of the server after the project is finished.

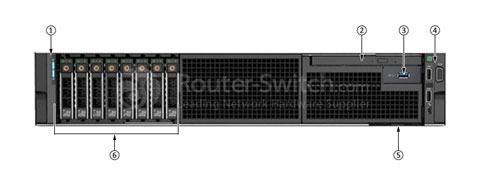

After considering the cost of different models, the HP DL380G9 server was selected.

Analysis Model

Based on the previous estimations, we need ~50 physical cores per server. Considering the cost of the latest-generation CPUs, I chose to maximize the core count of each CPU.

The DELL R740 was selected after careful consideration. This server model supports 2 Intel Xeon Platinum 8200 series CPUs, each with up to 205W TDP. This generation of CPU has a core count of up to 26, which makes it a better choice than the previous generation, where each CPU has a maximum of only 18 cores. The R740 has several submodels. Considering the cost, I chose the one with an 8 * 2.5-inch SSD drive bay.

General Model

A general-purpose server doesn't need to be powerful like the analysis server. A previous-generation server like DELL R630 should suffice.

Compression Model

Based on previous estimations, 48 logical cores are enough for our compression server. For simplicity, we use the same model as the general server.

Conclusion

In the end, we have the HP DL380G9 as our storage server, the DELL R740 as the analysis server, and the DELL R630 as the general and compression server. All the servers use DDR4 memory, so modules can be reused across them in the future.

Storage Server

An HP DL380G9 has up to 12 * 3.5 HDD slots. Since I wasn't able to find the OS SSD module for this model, I can only use 11 slots for hard drives.

CPU

Storage doesn't usually have a high CPU requirement, and it should draw as little power as possible. I could therefore choose an energy-efficient CPU. However, our storage servers are still conventional servers, which means I have to consider how they will be used afterward. Therefore, I chose the E5-2650V4 as the storage CPU.

Memory

A storage server has little requirement for memory capacity. Since all servers use DDR4, I chose to pick two 16G modules for this server.

HDD

I have two options for HDD: the WD HC320 8TB drive and the WD HC530 14TB helium drive. Both drives have a similar cost per TB, and using the HC530 would increase the capacity of a single server. However, unlike large companies or data centers, we don't want to replace a hard drive immediately after its warranty expires. A helium drive risks helium leakage in the long run, and helium also makes data recovery harder if it is ever needed. Therefore, I chose to go with the HC320.

SSD

I chose to have an SSD as the OS drive for this server. Since there is no high requirement for OS drives, I choose to have a 480G Micron 5200 ECO.

The configuration of the storage server is as follows:

| Model | CPU | CPU Spec | Memory Spec | HDD Spec | HDD Count | SSD Spec | SSD Count |
|---|---|---|---|---|---|---|---|
| HP DL380G9 | Dual Intel Xeon E5-2650V4 | 12 * 2.2-2.9G Core per CPU | 2 * 16G DDR4 @2133MHz | WD HC320 8TB | 11 | Micron 5200 ECO 480G | 1 |

Due to factors that are outside the scope of this project, I decided to have 4 storage servers, each equipped with 11 * 8TB HC320 drives.
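As a quick sanity check on the numbers above, the raw capacity of the storage tier can be worked out as follows. This is only a back-of-the-envelope sketch: the function name is illustrative, and the result is raw capacity before any RAID or filesystem overhead, which is not specified here.

```python
# Raw (pre-RAID, pre-filesystem) capacity of the storage tier.
# All figures come from the text; usable capacity will be lower.
SERVERS = 4              # storage servers
DRIVES_PER_SERVER = 11   # 12 bays minus the one used for the OS SSD
HC320_TB = 8             # WD HC320, decimal terabytes
HC530_TB = 14            # WD HC530, the option that was not chosen


def raw_capacity_tb(servers: int, drives: int, drive_tb: int) -> int:
    """Total raw capacity in TB for identical servers and drives."""
    return servers * drives * drive_tb


per_server_tb = raw_capacity_tb(1, DRIVES_PER_SERVER, HC320_TB)
total_tb = raw_capacity_tb(SERVERS, DRIVES_PER_SERVER, HC320_TB)
hc530_total_tb = raw_capacity_tb(SERVERS, DRIVES_PER_SERVER, HC530_TB)

print(per_server_tb, total_tb, hc530_total_tb)  # 88 352 616
```

The last figure shows what the same four servers would hold with the 14TB drives, which is the capacity given up in exchange for avoiding helium drives.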

Compression Server

CPU

Compression is a CPU-intensive task, and core count is crucial. Therefore, I chose the same E5-2650V4 CPU as the storage server.

Memory

Based on previous experiments, 7z requires ~16 GB of memory while compressing using 48 cores. Therefore, 2 * 16 GB of memory should be more than enough. Although I have 32 GB modules available, it is better for each CPU to have at least one DIMM module. Otherwise, every memory access from the CPU without local memory would have to cross to the other socket, which could be very costly.

SSD

A DELL R630 supports 6 * 2.5-inch drives. Since a compression server needs to read and write data simultaneously, SSDs are required. Given the performance requirement, the SSDs should ideally be durable and have a relatively high capacity. However, cost matters, and the data on the compression server is less crucial (the source won't be deleted from storage before compression finishes). Therefore, the cheaper Micron 5200 ECO 480G SSD was selected.

| Model | CPU | CPU Spec | Memory Spec | HDD Spec | HDD Count | SSD Spec | SSD Count |
|---|---|---|---|---|---|---|---|
| DELL R630 | Dual Intel Xeon E5-2650V4 | 12 * 2.2-2.9G Core per CPU | 2 * 16G DDR4 @2133MHz | N/A | 0 | Micron 5200 ECO 480G | 6 |

General Server

CPU

Unlike a compression server, the general server is used for a variety of tasks, and some of those applications might be sensitive to single-core performance. Therefore, I chose two Intel Xeon E5-2640V4 CPUs. This CPU has 2 fewer cores than the 2650V4 model but a higher base frequency.

Memory

None of the tasks are memory-heavy, so a conventional 2 * 16 GB should suffice. Although I have 32 GB modules available, it is better for each CPU to have at least one DIMM module. Otherwise, every memory access from the CPU without local memory would have to cross to the other socket, which could be very costly.

SSD

The general server needs to download the data and perform many other tasks. Therefore, its disk space needs to accommodate at least one batch of data (~700GB). Also, since we will validate the data on this server once it is downloaded, and the source files are gz archives, we need room for the temporary files produced by decompression. Although we are unlikely to decompress all 700GB at the same time, the decompressed files can be 3-7 times larger than the originals, so it is safer to keep at least twice the batch size as additional space (at least 2100GB in total).

Since the workload is similar to that of the compression server, I chose the same SSD configuration: 6 * 5200 ECO 480G. This provides over 2500GB of space, which is more than enough for our needs.
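The space budget above can be checked with a few lines of arithmetic. This is a rough sketch using the figures from the text. The worst-case estimate at the end assumes the compressed batch stays on disk while files are decompressed, which is my assumption, and it ignores RAID and filesystem overhead.

```python
# Disk-space budget for the general server, using the figures from the text.
BATCH_GB = 700        # size of one downloaded batch (compressed gz files)
EXTRA_BATCHES = 2     # additional headroom, in multiples of one batch
SSD_GB = 480          # Micron 5200 ECO
SSD_COUNT = 6

required_gb = BATCH_GB * (1 + EXTRA_BATCHES)   # one batch + 2x headroom
raw_gb = SSD_GB * SSD_COUNT                    # raw capacity of the array
spare_gb = raw_gb - BATCH_GB                   # left over once a batch is stored

# Assumption: worst-case 7x expansion, compressed sources kept on disk.
WORST_EXPANSION = 7
max_decompress_at_once_gb = spare_gb / WORST_EXPANSION

print(required_gb, raw_gb, spare_gb)       # 2100 2880 2180
print(round(max_decompress_at_once_gb))    # 311
```

Under these assumptions, roughly 300GB of compressed input could be expanded at once in the worst case, which is consistent with validating files in smaller groups rather than decompressing a whole batch.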

| Model | CPU | CPU Spec | Memory Spec | HDD Spec | HDD Count | SSD Spec | SSD Count |
|---|---|---|---|---|---|---|---|
| DELL R630 | Dual Intel Xeon E5-2640V4 | 10 * 2.4-3.4G Core per CPU | 2 * 16G DDR4 @2133MHz | N/A | 0 | Micron 5200 ECO 480G | 6 |

Analysis Server

CPU

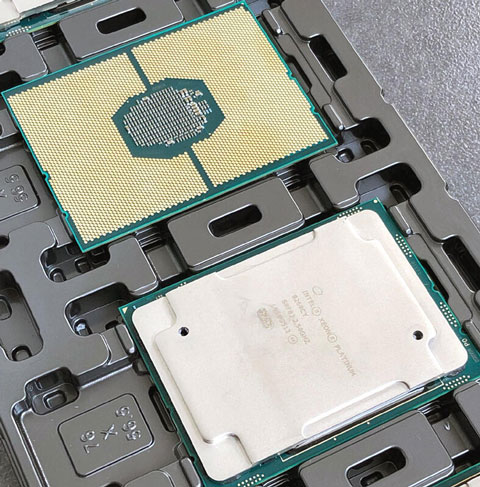

After surveying what was available, I narrowed the choice down to the following CPUs. Except for the 8275CL, which is slightly more expensive, all models have similar costs.

| Series | Model | Core Counts | Base Frequency | All Core Turbo | Single Core Turbo | TDP |
|---|---|---|---|---|---|---|
| Intel Xeon Platinum | 8269CY | 26C52T / 20C40T | 2.5G / 3.1G | 3.2G / 3.5G | 3.8G / 3.8G | 205W |
| Intel Xeon Gold | 6253CL | 18C36T | 3.1G | 3.7G | 3.9G | 205W |
| Intel Xeon Platinum | 8275CL | 24C48T | 3.0G | 3.6G | 3.9G | 240W |
| Intel Xeon Platinum | 8255C | 24C48T | 2.5G | 3.1G | 3.9G | 165W |

On paper, the 8275CL should win on performance. However, the 8275CL is an OEM model with a 240W TDP, and the highest officially supported TDP for all the retail servers I could find is 205W. Although it is common for servers to have some headroom, I couldn't risk the failure of the entire system. Therefore, the 8269CY was selected. This CPU has the added benefit that I can turn off 6 cores to have it run at a higher frequency. Once the project is finished and we no longer need that many cores but want a higher frequency, this could be really helpful.
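The selection logic can be summarized as a small filter over the table. This is only an illustrative sketch: it treats the "~50 physical cores per server" estimate as a hard floor and 205W as a hard ceiling, which is stricter than the reasoning in the text, and the class and function names are made up for the example.

```python
from typing import NamedTuple


class Cpu(NamedTuple):
    model: str
    cores: int   # physical cores per CPU (full-core mode)
    tdp_w: int   # thermal design power in watts


# Values copied from the comparison table.
CANDIDATES = [
    Cpu("8269CY", 26, 205),
    Cpu("6253CL", 18, 205),
    Cpu("8275CL", 24, 240),
    Cpu("8255C", 24, 165),
]

SOCKETS = 2
TARGET_CORES = 50   # "~50 physical cores per server", used as a hard floor here
MAX_TDP_W = 205     # highest officially supported TDP found for retail servers


def qualifies(cpu: Cpu) -> bool:
    """True if a dual-socket server meets the core target within the TDP limit."""
    return cpu.cores * SOCKETS >= TARGET_CORES and cpu.tdp_w <= MAX_TDP_W


selected = [cpu.model for cpu in CANDIDATES if qualifies(cpu)]
print(selected)  # ['8269CY']
```

With these thresholds only the 8269CY passes: the two 24-core parts give 48 cores per server, and the 8275CL also exceeds the TDP limit.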

Memory

The analysis servers are high-performance servers, and we need to maximize their performance. It is hard to test how memory performance affects our analysis pipeline, so I chose to maximize it. The 8269CY supports six-channel DDR4, so I assigned 12 * 32GB DDR4 memory modules to each server (6 for each CPU, one per channel).

The reason I use 32GB modules here is that once the server retires from this project, it is likely to be turned into a hypervisor due to its core count. We are unlikely to have another project with such high requirements, so a hypervisor is a reasonable guess. Based on my experience, memory is one of the most important factors for hypervisors. I can easily over-allocate CPUs to VMs because they are unlikely to be at peak load at the same time. But a lot of applications still require their full memory even in standby mode, and some operating systems like Windows will lock all the memory assigned to them even if it is not used within the OS. Since the number of memory slots per server is limited (24 for the R740), I need to increase the capacity of each module to raise the maximum possible total capacity.
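The effect of module size on the upgrade path can be illustrated with simple arithmetic. This is a sketch using the slot and channel counts from the text. The 16GB comparison is only there to show the alternative and is not a configuration that was considered for this server.

```python
# Memory layout of the analysis server, using figures from the text.
SOCKETS = 2
CHANNELS_PER_CPU = 6    # six-channel DDR4 per CPU
TOTAL_SLOTS = 24        # DIMM slots in the R740
MODULE_GB = 32

installed_modules = SOCKETS * CHANNELS_PER_CPU    # one DIMM per channel
installed_gb = installed_modules * MODULE_GB
free_slots = TOTAL_SLOTS - installed_modules

# Maximum capacity if every slot is filled with the same module size.
max_gb_with_32 = TOTAL_SLOTS * 32
max_gb_with_16 = TOTAL_SLOTS * 16   # hypothetical alternative, for comparison

print(installed_modules, installed_gb, free_slots)  # 12 384 12
print(max_gb_with_32, max_gb_with_16)               # 768 384
```

Populating one module per channel uses only half of the slots, so the server can later be doubled to 768GB without discarding any module, whereas 16GB modules would already top out at the capacity installed today.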

SSD

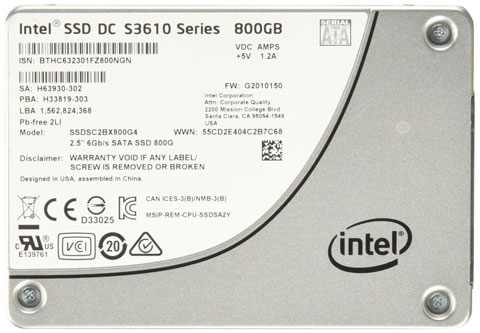

While a file is being processed, it is likely to be read while the result is written to disk at the same time. The server may also pre-fetch the next file and store the previous result in a remote location, and we run 17 jobs at the same time. Therefore, we need a sufficiently large SSD array. If a drive fails and the data on the analysis server is lost, we can easily restart the analysis, as the source is stored on the storage nodes, so the 5200 ECO would be sufficient for this purpose. However, considering the potential use of the server as a hypervisor, I chose the Intel S3610 800G for its better durability.

For simplicity, I use the same disk model: 6 * Intel S3610 800G SSD.

| Model | CPU | CPU Spec | Memory Spec | HDD Spec | HDD Count | SSD Spec | SSD Count |
|---|---|---|---|---|---|---|---|
| DELL R740 | Dual Intel Xeon Platinum 8269CY | 26 * 2.5-3.8G Core per CPU | 12 * 32G DDR4 @2133MHz | N/A | 0 | Intel S3610 800G | 6 |

Tape Library

Based on the price, the HP MSL 2024 with an LTO 6 tape drive was selected. I chose a fiber-optic LTO 6 drive instead of a SAS one. Unlike the SAS model, the fiber model uses LC-LC fiber for data transmission. LC-LC fibers are very common in optical LANs and are also what I planned to use. If I need to move the tape library away from the server that controls it in the future, it will be easy to find replacement LC-LC fibers or connectors. With SAS, I would need to buy a longer cable, which might not even be practical over a long distance.

The MSL 2024 can hold at most 24 tapes before they need to be swapped. I decided to purchase 100 tapes (about 4 full loads of the library) and see from there.
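To put the tape order in perspective, the sketch below works out how many library loads 100 tapes represent and how much data they hold. It assumes the standard LTO-6 native capacity of 2.5 TB per cartridge and that all 24 slots hold data tapes (no slot reserved for a cleaning cartridge). Neither assumption is stated in the text.

```python
import math

# Tape budget, using the counts from the text.
TAPES = 100
LIBRARY_SLOTS = 24
LTO6_NATIVE_TB = 2.5   # native (uncompressed) LTO-6 capacity per cartridge

full_loads, leftover_tapes = divmod(TAPES, LIBRARY_SLOTS)
loads_needed = math.ceil(TAPES / LIBRARY_SLOTS)   # loads to cycle through every tape

per_load_tb = LIBRARY_SLOTS * LTO6_NATIVE_TB
total_native_tb = TAPES * LTO6_NATIVE_TB

print(full_loads, leftover_tapes, loads_needed)  # 4 4 5
print(per_load_tb, total_native_tb)              # 60.0 250.0
```

Native capacity is used because the data is expected to be compressed before it reaches tape, so the drive's built-in compression is unlikely to add much.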

Network

Switch

As we discussed before, an Arista 7050QX-32 switch will be used as the core switch. This switch features QSFP+ 40G ports, and each QSFP+ port can easily be split into 4 * 10G SFP+ ports. However, both QSFP+ and SFP+ ports are usually used with fiber transceivers, and RJ45 modules for them are very expensive. We still have a lot of conventional RJ45 devices in the network (like the IPMI management port of each server), so connecting them this way would be very costly. Also, having a 1G RJ45 module occupy a 40G port would be a great waste. Therefore, we need another switch.

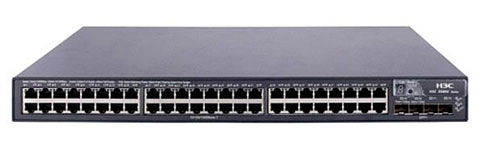

The second switch provides access for all RJ45 devices. Since those devices are unlikely to talk to each other (they are mostly end-point devices that need to access bare-metal servers, which are connected directly to the 40G ports), most of their traffic crosses the uplink, so we need a sufficiently fast uplink. This switch also needs to be a managed model, in case we need VLANs in the future. After searching online, I found an old H3C S5200 managed switch. It features 48 * 1G RJ45 ports and 4 * SFP+ 10G ports that can be aggregated into a single 40G uplink.
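Whether the aggregated uplink is "sufficiently fast" can be expressed as an oversubscription ratio. The following is a minimal sketch using the port counts above. It assumes the worst case in which all 48 access ports transmit at line rate at once, which is unlikely for management traffic.

```python
# Oversubscription of the access switch uplink, using figures from the text.
ACCESS_PORTS = 48
ACCESS_GBPS = 1
UPLINK_PORTS = 4
UPLINK_GBPS = 10

downlink_gbps = ACCESS_PORTS * ACCESS_GBPS   # worst-case demand from access ports
uplink_gbps = UPLINK_PORTS * UPLINK_GBPS     # aggregated uplink capacity
oversubscription = downlink_gbps / uplink_gbps

print(downlink_gbps, uplink_gbps, round(oversubscription, 2))  # 48 40 1.2
```

A ratio of 1.2:1 means the uplink is close to non-blocking even in the worst case. Note that link aggregation typically balances traffic per flow, so a single connection is still limited to one 10G member link.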

Gateway

Initially, we only had a 500Mbps uplink, so a gateway router with a 1G port was sufficient, and this router was connected to our second switch. By the time of writing, I had upgraded the internet connection to 2 * 1G links, and the gateway had been replaced by a dedicated R630 server with a 10G LAN port. For more information about the upgraded network, please refer to datacenter - network.

NIC

Once we have the 40G switch, we also need a 40G NIC for each server. I found the Mellanox MCX354A-FCBT (ConnectX-3, HP OEM) to be a great deal. Each card has two 40G/56G QSFP+ ports, and the price is affordable. This is a dual-mode NIC: in Ethernet mode it supports up to 40 Gb/s, in InfiniBand mode it supports up to 56 Gb/s, and each port can be configured independently. Although I don't need InfiniBand for now, this gives me the option to run InfiniBand in the future at a lower cost.
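Since link speeds are quoted in bits per second while file sizes are quoted in bytes, it helps to convert between the two when comparing the NIC to disk throughput. The helper below is a small sketch. Its name is illustrative, and it ignores encoding and protocol overhead, so real-world throughput will be lower.

```python
def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert a link rate in gigabits per second to gigabytes per second."""
    return gbps / 8


ethernet_gb_per_s = gbps_to_gigabytes_per_s(40)     # Ethernet mode
infiniband_gb_per_s = gbps_to_gigabytes_per_s(56)   # InfiniBand mode

print(ethernet_gb_per_s, infiniband_gb_per_s)  # 5.0 7.0
```

At the theoretical 5 GB/s of a 40G Ethernet port, one ~700GB batch of data would take a little over two minutes to move, so the network is unlikely to be the bottleneck compared with the drives.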

Fiber/Cable/Transceivers

Once the number of servers and the network equipment were set, I calculated the number of fibers and cables needed. The result is as follows. Note that this is only a small part of the cables used in the entire project: it is common to need a different model or length during installation, and future changes are not included.

| Type | Model | Spec | Length | Number | Usage | Notes |
|---|---|---|---|---|---|---|
| Transceiver | Finisar | QSFP-40G-SR4 | N/A | 26 | End Point | N/A |
| Transceiver | Finisar | SFP 1G | N/A | 1 | Tape Library Connection | N/A |
| Fiber | LC-LC | N/A | 3 m | 1 | Tape Library Connection | N/A |
| Fiber | MPO OM4 | 12 Core Male-Male | 3 m | 17 | Server Connection | N/A |
| Fiber | MPO OM4 | 12 Core Female-Male | 3 m | 7 | Fiber Extension | N/A |
| Fiber Coupler | MPO | N/A | N/A | 10 | Fiber Extension | N/A |
| Network Cable | RJ45 | CAT5e Yellow | 3 m | 13 | IPMI Connection | N/A |
| Network Coupler | RJ45 | CAT5e | N/A | 10 | Network Cable Extension | N/A |
| Switch | Arista | 7050QX-32 | N/A | 1 | Core Switch | N/A |
| Switch | H3C | S25000 | N/A | 1 | Switch | N/A |
| Gateway | x86 | OpenWRT | N/A | 1 | Internet & Firewall | N/A |
| NIC | Mellanox | MCX354A | N/A | 11 | Server Connection | N/A |
| NIC | LPE12002 | 8G HBA | N/A | 1 | Tape Library Connection | N/A |

List of Hardware

Due to space limitations and also ease of description, the complete list of hardware/equipment will be available in the Installation section.