Preliminary - Resource Estimation

Introduction

We already have the performance statistics, so now it's time to estimate the total hardware resources.

This section contains five parts: Analysis Server, Compression Server, Storage Server, Tape Library, and Network. The general server, given its flexibility, will be discussed in the hardware section.

Analysis Server

Analysis servers are used for genetic analysis and place high demands on CPU performance. Assuming the processing time is directly proportional to the file size, several tests showed that a 3.3 GHz core can process about 2.6 GB per day.

Performance Analysis

The 3.3 GHz core we used for testing has a relatively old architecture (E7-8895 v2) and runs on top of a virtualization layer. A newer architecture such as the Intel Xeon 8200 series at 3.0 GHz, without virtualization, should deliver similar performance at a lower frequency, given the IPC improvements of newer architectures.

Assuming each hyper-thread acts as 0.7 of a core, a server with 50 physical cores and 100 threads (50 cores plus 50 hyper-threads) is equivalent to 85 cores and can process up to about 220 GB per day. With interleaved-ended files (which double the size but do not increase the processing time), 3 servers with a combined 660 GB per day should be enough for 500-700 GB of data per day.
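The arithmetic behind this estimate can be reproduced with a minimal sketch. The function and constant names are illustrative and not part of the original tooling; the numbers are the ones quoted above.

```python
# Rough throughput estimate for one analysis server.
GB_PER_CORE_PER_DAY = 2.6  # measured above: one 3.3 GHz core handles ~2.6 GB/day
HYPERTHREAD_WEIGHT = 0.7   # assumption from the text: a hyper-thread counts as 0.7 core


def server_throughput_gb_per_day(physical_cores: int, hyper_threads: int) -> float:
    """Estimated GB/day for one server, assuming linear scaling with cores."""
    effective_cores = physical_cores + HYPERTHREAD_WEIGHT * hyper_threads
    return effective_cores * GB_PER_CORE_PER_DAY


per_server = server_throughput_gb_per_day(50, 50)
three_servers = 3 * per_server
print(f"per server: {per_server:.0f} GB/day")
print(f"3 servers:  {three_servers:.0f} GB/day")
```

This gives roughly 221 GB per day per server and 663 GB per day for three servers, which the text rounds to 220 and 660.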

Memory Requirement

Due to the long running time, I didn't record memory usage at every stage. However, I checked about 10 times during the run and didn't notice significant memory usage.

Storage Requirement

Based on previous estimates, each file can occupy at most 7 threads. Therefore, ~100 threads can process about 15 files at the same time. Allowing a slight overallocation to increase efficiency, we could process at most 17 files simultaneously. A single file is usually up to 20 GB, and an interleaved-ended sequence can consist of a pair of 20 GB files. In the worst case, we could have 17 * 20 * 2 = 680 GB being processed on the server at the same time. Allowing for optimization strategies such as prefetching or delayed write-back, we should have at least 680 * 3 = 2040 GB, or roughly 2100 GB, of space on each analysis server.
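The same worst-case sizing can be written as a short sketch. All names are illustrative, and the factor of 3 is simply the headroom multiplier used above for prefetch and delayed write-back.

```python
import math

THREADS_PER_FILE = 7   # from the text: a file occupies at most 7 threads
MAX_FILE_GB = 20       # from the text: a single file is usually up to 20 GB
FILES_PER_SAMPLE = 2   # an interleaved-ended sequence is a pair of files
HEADROOM_FACTOR = 3    # from the text: room for prefetch and delayed write-back


def scratch_space_gb(concurrent_files: int) -> int:
    """Worst-case local space needed for the given number of concurrent files."""
    working_set_gb = concurrent_files * MAX_FILE_GB * FILES_PER_SAMPLE
    return working_set_gb * HEADROOM_FACTOR


# ~100 threads at 7 threads per file, rounded up as in the text
baseline_files = math.ceil(100 / THREADS_PER_FILE)
required_gb = scratch_space_gb(17)  # 17 files with slight overallocation
print(baseline_files, required_gb)
```

With 17 concurrent files the result is 2040 GB, which is where the ~2100 GB figure comes from.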

Conclusion

Based on this rough estimate, we need at least 3 high-performance servers, each with at least 50 cores of a "not-out-of-date" architecture. The specific hardware will be discussed in a later chapter.

Later, the total amount of data increased to 2000TB, and we needed to process ~2 TB per day. Luckily, the analysis script I developed turned out to be unexpectedly efficient: 2 of the servers described above could process over 2 TB per day. This will be discussed later; for now, we will stay with our 3-server estimate.

Compression Server

File compression is a CPU-intensive task that can usually saturate all the cores. Therefore, it makes no sense to run compression and other tasks on the same machine. Given the amount of data we need to process each day, dedicated compression servers are needed.

Compressing Strategy

Before any hardware can be decided, we need to choose how we want to compress the file. We need to find a balance between the computational power, which is directly related to server and electricity cost, and the compressed size, which is related to the cost of hard drive or tape.

The source files we have end in ".fastq.gz", which means they are gzip archives. We have to decide whether we need to unzip the files first, and which format and compression strategy to use. Or maybe we could apply compression directly on top of the gz archive without decompressing it first? Or maybe we don't even need an additional layer of compression.

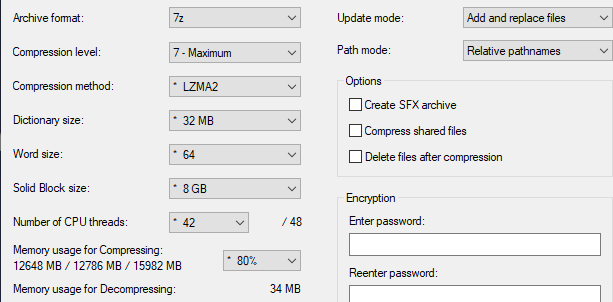

At the time of the project, 7-Zip supported seven formats: 7z, bzip2, gz, tar, wim, xz, and zip. Based on past experience, 7z should be used because of its high compression ratio. If the source were not a gz archive, zip would also be an option, but in our case zip generally can't provide a better ratio than the gz format the source already uses. For 7z, there are 6 compression levels: store, fastest, fast, normal, maximum, and ultra. To find the best one, several experiments were performed.

Given the high cost of newer-generation platforms, I decided to use older platforms like C612 (E5 v3/v4 CPUs). As I already had several such servers running, I could easily perform the tests.

Below are partial results of the experiment.

| Total Size | Sample Size (Before Decompression) | Cores Used | Unit Time (Per Sample) | Operation | Total Cores | Total Data Processed (Unit Time) | Total Time | File Size (After Compression) |
|---|---|---|---|---|---|---|---|---|
| 500G | 3.97G | 1 | 105s | Decompression | 36 | 36*3.97=142G | 500/142*105=370S | N/A |
| 700G | 3.97G | 1 | 105s | Decompression | 36 | 36*3.97=142G | 700/142*105=518S | N/A |
| 500G | 3.97G | 32 | 1254s | Compression(Ultra) | 32 | 3.97G | 500/3.97*1254=157834S=43H | 2.82G |
| 700G | 3.97G | 32 | 1254s | Compression(Ultra) | 32 | 3.97G | 700/3.97*1254s=61H | 2.82G |
| 500G | 3.97G | 16 | 1945s | Compression(Ultra) | 32 | 7.94G | 500/7.94*1945s=34H | N/A |
| 700G | 3.97G | 16 | 1945s | Compression(Ultra) | 32 | 7.94G | 700/7.94*1945s=47H | N/A |
| 500G | 3.97G | 16 | 1200s | Compression(Normal) | 32 | 7.94G | 500/7.94*1200S=21H | N/A |
| 700G | 3.97G | 16 | 1200s | Compression(Normal) | 32 | 7.94G | 700/7.94*1200S=30H | N/A |
| *500G | 3.97G | 48 | 1052s | Compression(Max) | 48 | 3.97G | 500/3.97*1052=36H | 2.90G |
| 500G | 3.97G | 48 | 796s | Compression(Normal) | 48 | 3.97G | 500/3.97*756=27H | 3.24G |
| 500G | 3.97G | 16*2 | 1200s | Compression(Normal) | 32 | 7.94G | 500/7.94*1200=21H | 2.90G |

Capacity Requirement

Given the amount of data each server needs to process each day, at least 700 * 3 = 2100 GB should be available on the storage server. This provides space for about 3 batches of data.

Conclusion

Based on the results collected, we will apply compression directly to the source files without decompressing them first. We found that the "Maximum" compression level of 7z provides an ideal balance between computational power and the amount of equipment. With this plan, each server with 48 logical cores can process 500 GB every 36 hours and 700 GB every ~50 hours. Given that we don't always have 700 GB each day, two servers dedicated to compression should be just right.
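The "Total Time" figures in the table follow a simple linear model, sketched below. The function name is illustrative; the inputs (3.97 GB sample, 1052 s per sample at "Maximum" on 48 cores) come from the table, and the model assumes compression time scales linearly with data size.

```python
def batch_hours(total_gb: float, sample_gb: float, unit_time_s: float,
                parallel_jobs: int = 1) -> float:
    """Hours to compress total_gb, assuming time scales linearly with size.

    parallel_jobs is the number of samples compressed at the same time.
    """
    gb_per_round = sample_gb * parallel_jobs
    return total_gb / gb_per_round * unit_time_s / 3600


# "Maximum" level, one job using all 48 logical cores
max_500 = batch_hours(500, 3.97, 1052)
max_700 = batch_hours(700, 3.97, 1052)

# Combined daily capacity of two such servers
two_server_gb_per_day = 2 * 500 / max_500 * 24

print(f"500 GB: {max_500:.1f} h, 700 GB: {max_700:.1f} h")
print(f"two servers: {two_server_gb_per_day:.0f} GB/day")
```

Under this model, one server needs a little under 37 hours for 500 GB and about 51.5 hours for 700 GB, so two servers together handle roughly 650 GB per day. That covers the typical daily volume but not a sustained 700 GB per day.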

Storage Server

Total Amount of Storage

Clearly, hard drives shouldn't be used to store all the source files. Instead, we use them to store temporary data: files that haven't finished downloading, are pending analysis, are in an error state, or are pending compression all need to be stored on hard drives.

Given that deletion is a sensitive operation, and we probably won't do it every day or automatically, the storage space has to be large enough to accommodate different situations. A safe estimate is to have at least 40 TB of space.

RAID Card

Unlike analysis or general servers, the storage server needs to provide a reliable file service. Therefore, the performance of the RAID card is crucial, and it needs to be capable of creating a RAID 6/10 array, which we are likely to use. I chose the HP P840 12G RAID controller with 4 GB of on-board memory. This controller should be powerful enough to handle our disk array of 11 disks.
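As a rough sanity check on disk sizing, the sketch below computes the minimum per-disk capacity needed to reach the 40 TB target, assuming that target is served by the 11-disk array. The function names are illustrative. The RAID 10 case assumes the odd eleventh disk is kept as a hot spare, which is an assumption and not something stated above. Filesystem overhead and TB/TiB differences are ignored.

```python
def min_disk_tb_raid6(target_tb: float, disks: int) -> float:
    """RAID 6 spends two disks' worth of capacity on parity."""
    return target_tb / (disks - 2)


def min_disk_tb_raid10(target_tb: float, disks: int) -> float:
    """RAID 10 mirrors pairs; an odd leftover disk is assumed to be a hot spare."""
    mirrored_pairs = disks // 2
    return target_tb / mirrored_pairs


raid6_min = min_disk_tb_raid6(40, 11)
raid10_min = min_disk_tb_raid10(40, 11)
print(f"RAID 6:  at least {raid6_min:.2f} TB per disk")
print(f"RAID 10: at least {raid10_min:.2f} TB per disk")
```

Under these assumptions, RAID 6 needs disks of about 4.5 TB or larger, while RAID 10 needs 8 TB disks for the same usable space.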

Server Options

The server should be able to provide 40TB of storage space, which is not hard. The details of the storage servers will be discussed later in the structure section, due to factors that are outside of this project.

Tape Library

Based on initial searches, an LTO6 tape system is what we can afford for a total of 430TB of data. We also don't have to purchase all the tapes at once; we can buy them as more data becomes available for storage. For now, we only need to find the most cost-efficient model.
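To get a feel for the number of cartridges involved, here is a minimal sketch. It uses the LTO-6 native capacity of 2.5 TB per cartridge, on the assumption that the data is already compressed and will gain little from the drive's hardware compression. The `copies` parameter is hypothetical and only illustrates the effect of keeping more than one copy.

```python
import math

LTO6_NATIVE_TB = 2.5  # LTO-6 native (uncompressed) capacity per cartridge


def tapes_needed(total_tb: float, copies: int = 1) -> int:
    """Number of LTO-6 cartridges for total_tb of data, ignoring per-tape overhead."""
    return math.ceil(total_tb * copies / LTO6_NATIVE_TB)


single_copy = tapes_needed(430)
print(single_copy)
```

For 430 TB this comes to 172 cartridges for a single copy, which can be bought incrementally as data arrives.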

The Library

Given how small the tape system market is, it is not hard to find the ideal (cheapest) model. As a result, an HP MSL2024 library with an LTO6 tape drive was selected.

Network

This section only considers the number, model, and type of the primary equipment, such as switches or the gateway. Components like transceivers or fiber cables will be discussed in "Installation".

Core Switch

We wanted a 10G model as our core switch. First of all, I ruled out models whose ports are primarily 10G RJ45. Network cables can be unreliable and expensive on a 10G link, and 10G RJ45 NICs are also more expensive than 10G SFP+ NICs. Therefore, we wanted a 10G fiber switch. Note that a 10G fiber link is not necessarily an SFP+ link; I chose SFP+ because it is the most prevalent type.

Soon after I began my search, I found a 40G core switch with 32 40G QSFP+ fiber ports (Arista 7050QX-32). Each 40G QSFP+ port can be split into 4 * 10G SFP+ ports using only a single breakout fiber cable, with no additional converters. What actually surprised me is that this switch was even cheaper than the 10G switches.

So we ended up with a 40G switch.

Gateway

It is hard for us to afford dedicated equipment like a hardware firewall, so I decided to use a "software router" as the gateway, which I can customize extensively. The gateway initially chosen was an embedded x86 system running OpenWRT when the project started. It was later replaced by a dedicated DELL R630 server during the migration to my mini data center. For more information about the updated gateway, please refer to datacenter - network.